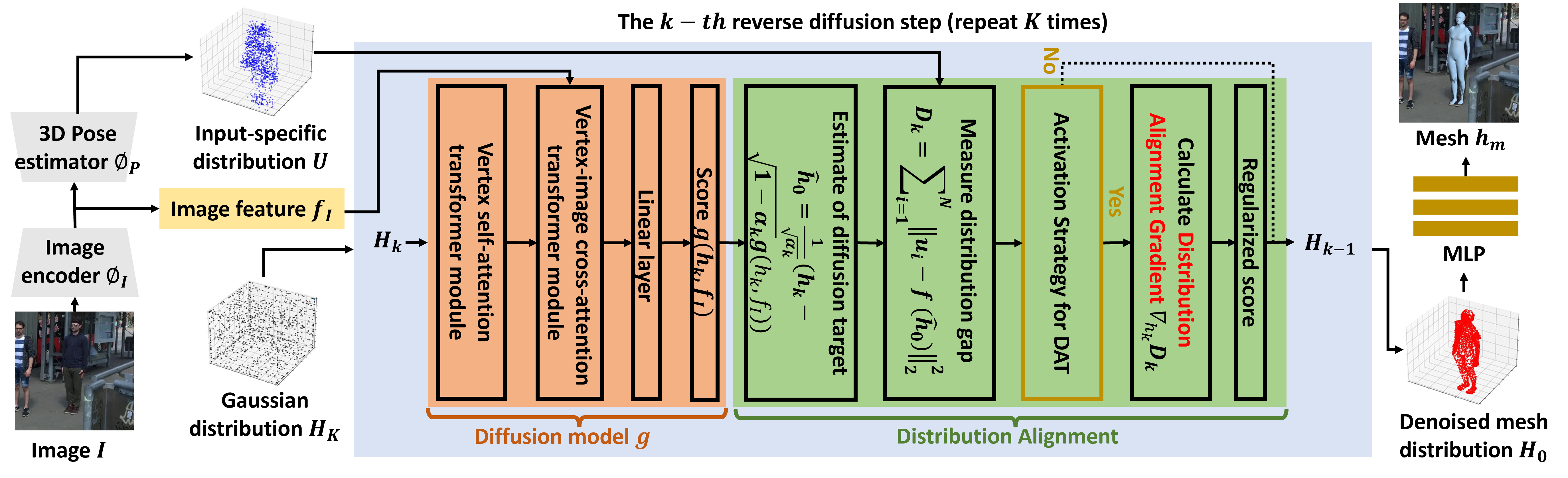

Recovering a 3D human mesh from a single RGB image is a challenging task due to depth ambiguity and self-occlusion, resulting in a high degree of uncertainty. Meanwhile, diffusion models have recently seen much success in generating high-quality outputs by progressively denoising noisy inputs. Inspired by their capability, we explore a diffusion-based approach for human mesh recovery, and propose a Human Mesh Diffusion (HMDiff) framework which frames mesh recovery as a reverse diffusion process. We also propose a Distribution Alignment Technique (DAT) that injects input-specific distribution information into the diffusion process, and provides useful prior knowledge to simplify the mesh recovery task. Our method achieves state-of-the-art performance on three widely used datasets.

Illustration of the proposed Human Mesh Diffusion (HMDiff) framework with the Distribution Alignment Technique (DAT).

Foo, L. G., Gong, J., Rahmani, H., & Liu, J. Distribution-Aligned Diffusion for Human Mesh Recovery. In ICCV, 2023. (hosted on ICCV)

Acknowledgements